Google has made a raft of announcements, all aimed at improving its core product: Search. At its Search On event, the company made it clear that it will focus on using AI to help its users. Among the new features are a new algorithm to better handle spelling mistakes in user queries, the ability to identify songs from humming, and new tools to help students with homework.

The company also announced updates to Google Lens and other search-related tools.


'Mipsellings' may not matter

The Google event, which was streamed live, was "for sharing several new advancements to search ranking, made possible through our latest research in AI."

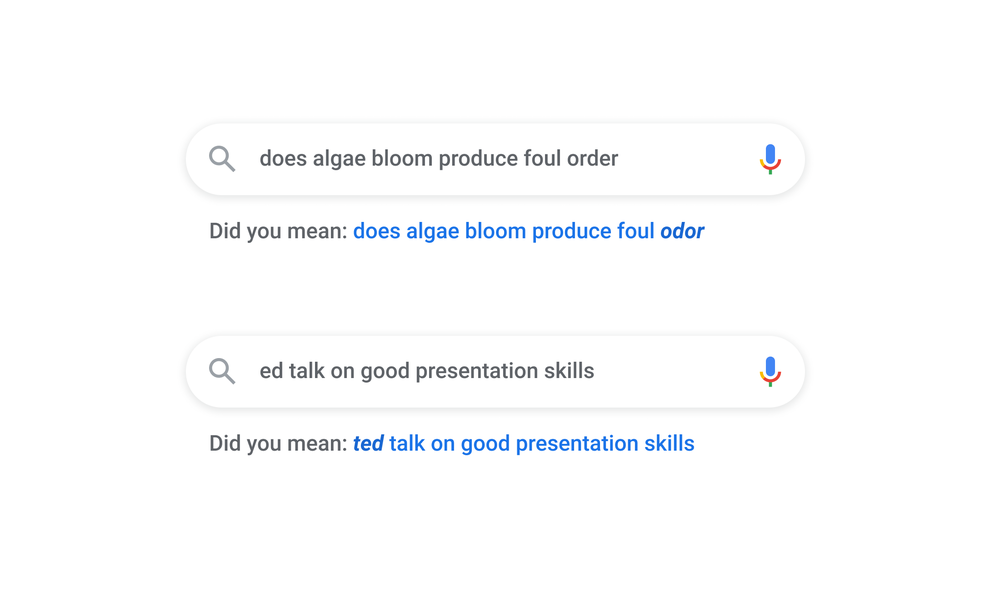

One of the highlights was Google Search's improved ability to handle typos. Google said 1 in 10 queries is misspelled. Although its 'Did you mean?' feature already addresses this, Google is improving on it. "Today, we’re introducing a new spelling algorithm that uses a deep neural net to significantly improve our ability to decipher misspellings. In fact, this single change makes a greater improvement to spelling than all of our improvements over the last five years," Prabhakar Raghavan, Google's Senior Vice President, Search & Assistant, Geo, Ads, Commerce, Payments & NBU, said.

The new spelling algorithm helps Google understand the context of misspelled words, so it can help users find the right results, all in under 3 milliseconds.

Google expects its new technology will improve 7 percent of search queries across all languages as it rolls it out globally.

Important data now readily available

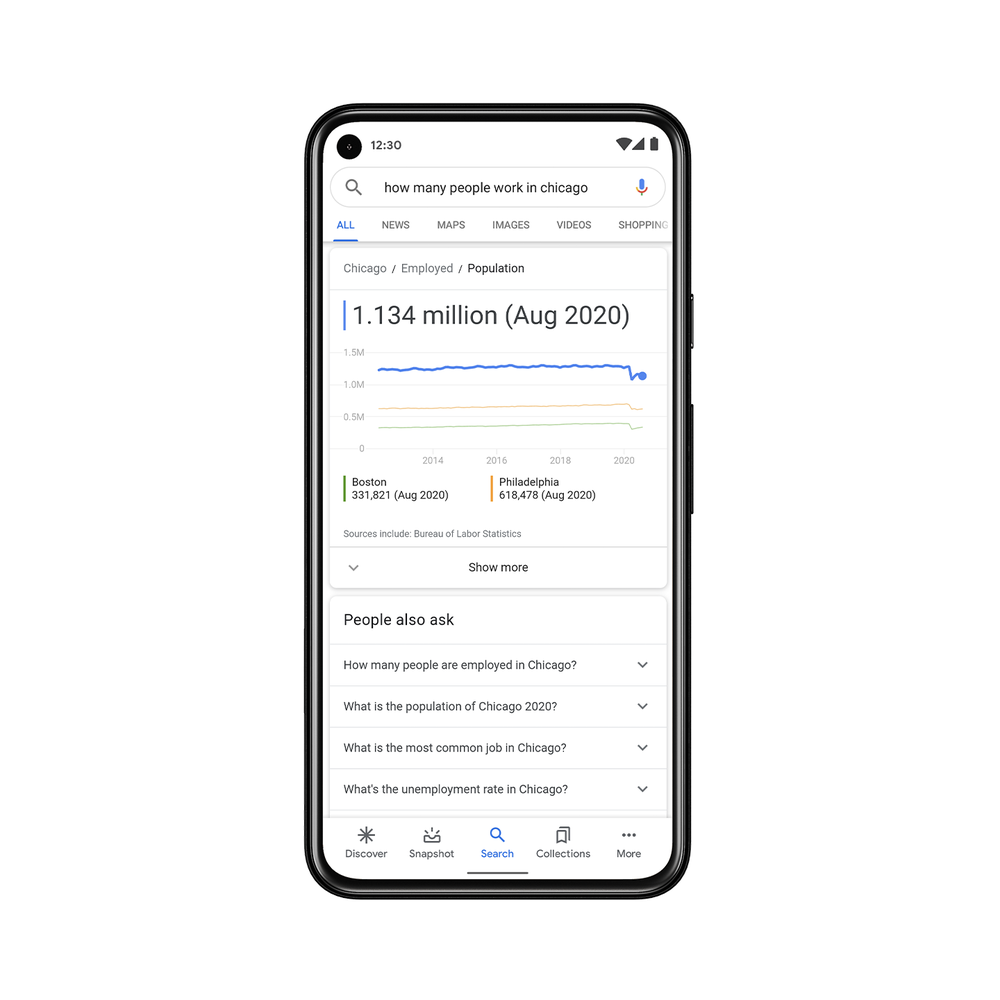

Google is also bringing into Search various data sources that were previously available only as part of Google’s Open Data Commons.

"Now when you ask a question like 'how many people work in Chicago,' we use natural language processing to map your search to one specific set of the billions of data points in Data Commons to provide the right stat in a visual, easy to understand format. You’ll also find other relevant data points and context—like stats for other cities—to help you easily explore the topic in more depth," Google said.

Key moments in video content

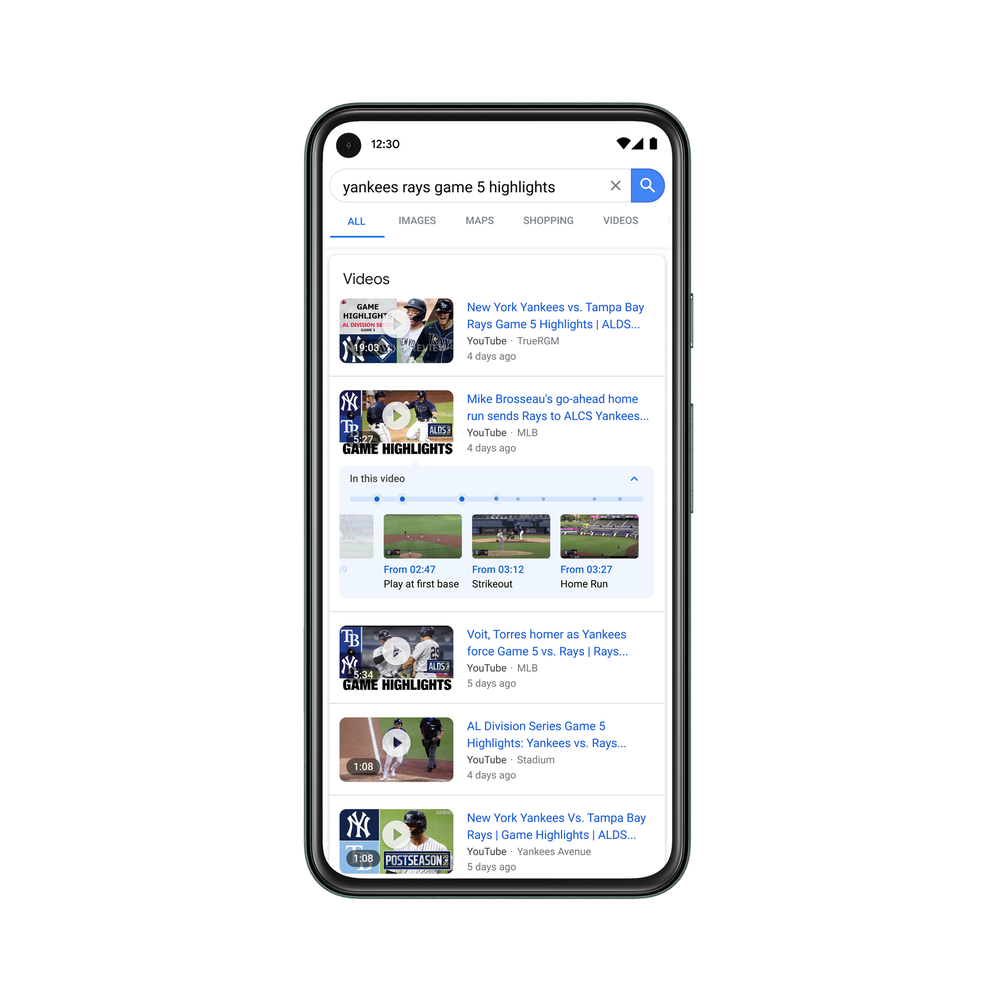

As video content grows more popular, Google is using advanced computer vision and speech recognition to tag key moments in videos.

"Using a new AI-driven approach, we’re now able to understand the deep semantics of a video and automatically identify key moments. This lets us tag those moments in the video, so you can navigate them like chapters in a book. Whether you’re looking for that one step in a recipe tutorial, or the game-winning home run in a highlights reel, you can easily find those moments. We’ve started testing this technology this year, and by the end of 2020 we expect that 10 percent of searches on Google will use this new technology."

Hum it and get it

Google said it can now identify a song from humming or whistling. In Google Search, users can tap the microphone icon and say “what’s this song?” or click “Search a song,” and then hum for 10-15 seconds. On Google Assistant, users can say, “Hey Google, what’s this song?” and then hum the tune.

Lens lends itself to multiple uses

Google Lens can now read out a passage from a photo of a book, no matter the language. Users can also click on any image they come across, and Lens will show similar items and suggest ways to style outfits.

Using Google Lens, users can snap a photo of a homework problem, and it will turn the question in the image into a search query. The results will then show how to solve the problem step by step.

Maps have more on them

On the shopping front, if you're looking for, say, a car, Google will now be able to give an AR view so you can see what it looks like in your own space. "Social distancing has also dramatically changed how we shop, so we’re making it easier to visually shop for what you’re looking for online, whether you’re looking for a sweater or want a closer look at a new car but can’t visit a showroom," Google said.

On Google Maps, Google will show live info right on the map, so you don’t have to specifically search for it. "We’re also adding COVID-19 safety information front and center on Business Profiles across Google Search and Maps. This will help you know if a business requires you to wear a mask, if you need to make an advance reservation, or if the staff is taking extra safety precautions, like temperature checks. And we’ve used our Duplex conversational technology to help local businesses keep their information up-to-date online, such as opening hours and store inventory," Google said.

For journos, too

As part of Journalist Studio, Google is launching Pinpoint, a new tool that brings the power of Google Search to journalists. "Pinpoint helps reporters quickly sift through hundreds of thousands of documents by automatically identifying and organizing the most frequently mentioned people, organizations and locations. Reporters can sign up to request access to Pinpoint starting this week," Google said.

Source: Google
